The Quest For Exceptional Software Quality

Written by Ned Kremic

"or how to achieve the outstanding software quality"

What is quality?

The actual definition of quality is probably the last thing that comes to mind.

Most likely you will think of names that are synonymous with quality: Lexus, a Swiss watch, the iPod, iPhone or iPad (Apple products), or something similar.

The Quest for Chronometric Precision

Rolex: a fine piece of Swiss jewelry, and the first wristwatch awarded the class “A” precision certificate. A Rolex is passed from generation to generation and is still ticking today, precise to the second!

Yes, it takes years to build a renowned brand name that everyone associates with innovative products of exceptional quality.

Needless to say, those products fulfill their intended function and are free of defects. The name implicitly says it all: the Rolex wristwatch is synonymous with precision and quality.

The Quest for Software Precision and Quality

So, if those products, some of them centuries old, are associated with exceptional quality, why is a newer technology like software still plagued with numerous issues and defects, despite all the advancements in software development and quality assurance?

Production incidents are a fact of life in the software industry. They sometimes cost millions of dollars, disrupt services and create a negative brand image that can be disastrous for the company.

What has happened to us?

Is the culprit the way we do testing and quality assurance?

Let’s briefly look back at how software has traditionally been built and tested:

1970: Winston W. Royce presented the “waterfall” model (Requirements, Design, Implementation, Verification, Maintenance).

2012: 42 years later, the majority of software projects are still testing quality after the product has been built.

What others have been doing

This sounds really strange, as other major manufacturing industries, such as automotive, shifted away from the traditional quality assurance model long ago, following the Japanese reputation for building high-quality products.

The Toyota Production System, known for following the "kaizen" philosophy of continuous improvement, has made a major impact on a world scale, forcing other car manufacturers to change their quality principles and philosophy as well.

Therefore, if other industries have successfully implemented quality approaches such as:

- "kaizen" - continuous improvement

- “muda” - eliminate the waste and identify activities that add value

- “kanban” - lean and just-in-time production

why are the majority of software projects still using a 42-year-old quality process? What happened to continuous improvement in the software quality process, despite the continuous improvement and advancement in software technology!?

Can we learn something from Taiichi Ohno, the father of the Toyota Production System, and W. Edwards Deming, who made a significant contribution to Japan's reputation for innovative high-quality products?

Of course we can, and best of all, we don’t have to innovate or try something new. We just need to break free from the old “continuous non-improvement” quality process and follow the already proven principles that other industries have successfully implemented.

Although there are numerous examples of successful application of the above-mentioned principles, here is my quality story: “how to make the software equivalent of the Swiss watch”!

How do we plan to achieve that goal?

Well, we are going to use the best principles that Taiichi Ohno and W. Edwards Deming have taught others over the last few decades.

First, we will apply the Deming principle:

"Rather than trying to test quality after a product has been built, we build quality into the process and product as it is being developed"

and we will go one step further:

We are not just going to move testing into the development process; we are planning to use tests to drive the development process!

How do we implement the Deming principle in the software development process?

The Agile answer to this question is to integrate testing right into the development process, not after it!

Therefore a typical Agile (XP, Scrum) team has developers and testers working alongside each other, and mature agile teams pair not just developers with developers (pair programming), but developers with testers as well.

And if testers are integrated into the development team, what levels of testing do we do?

This is a valid question, especially considering that today there are many levels of testing. Except for unit testing, which unfortunately most teams either skip or do too late, after the code has been written, all the others are done after the development phase (code freeze).

- Unit Tests

- Integration Tests

- Functional Tests

- Performance Tests (PET)

- User Acceptance Tests (UAT)

Which ones do we do in agile teams?

Short answer for Scrum: all of them! All of them that are applicable to the small, incremental piece of functionality that we are delivering in this particular iteration. And they are the team’s responsibility.

Although that approach may be feasible for smaller projects, or even large projects where all teams are co-located, it may be impossible for large enterprise projects where development teams are spread worldwide, in different time zones, and outsourced to other companies.

In such projects it is hard or impossible to do the integration testing within the same iteration, due to dependencies and other factors. However, there are numerous ways, and successful agile examples, of applying “the Deming principle” and integrating quality right into the development process, even for enterprise-level projects.

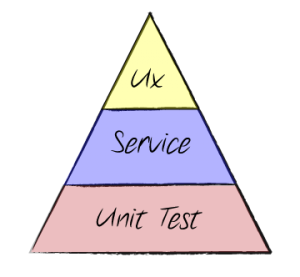

To simplify, we will reshuffle and regroup the testing layers into three distinct groups:

- User Experience Tests: The system as seen from user perspective

- Service Level Tests: The system under the hood

- Unit Tests: Nuts and bolts of the system

Why only three levels, and why such grouping?

The best way to answer “why” is to visualize. As software is an intangible system, the best way to explain it is by analogy with something that everybody is familiar with: a car.

User Experience Tests: The system as seen from user perspective

This is the view of the “system” from the end user's perspective.

The picture says it all. Imagine yourself doing a “test drive” of this car! You can easily come up with 10, 20 or 100+ test criteria you would like to check in this car: design, color, comfort, speed, acceleration, braking distance, ABS, traction control, etc.

When you approach this car for the test drive and want to check the acceleration time from 0 to 100 km/h, you probably don’t care whether it has an I6 or a V8 engine, or whether the engine block was built from stainless steel or ceramic.

You are testing only the “user experience”.

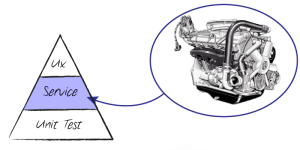

Service Level Tests: The system under the hood

However, most complex systems are much more than meets the eye. The average system is like an iceberg, where only the smaller part is visible and accessible to the end user. The rest is hidden under the water, but that is the part of the system that carries most of the functionality.

Back to our car model.

This is the view of one module of the system “under the hood”.

Many software practitioners would think that it represents the traditional middleware, but it does not. Service level testing covers all system components which, when integrated, provide the desired functionality of the system. Therefore, for a traditional complex multi-tier system with a front end (presentation tier), middleware (application tier) and back end (data tier), service level testing is the testing of all modules within all three tiers.

In Agile we intentionally do not want to separate testing along horizontal layers, such as the layers of a multi-tier system. Rather, we try to test a user story, which represents the flow from end to end (a vertical slice of the cake). The only way to do this is to treat all system components as part of the service level.

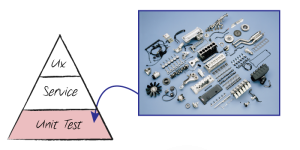

Unit Tests: The Nuts and Bolts of the System

This is the view of the integral pieces (nuts & bolts) of the “system” from the unit test perspective.

It would be silly to take iron bolts, plastic pistons and paper seals, assemble an engine from them and only then test the engine on the test bench.

It would be even sillier to install that engine in the car and then test it with end-to-end tests after everything is done!

Sooner or later we will find the obvious deficiencies, but fixing them at that point would be too costly.

Unfortunately, we do exactly this with most traditionally built software systems.

The best way is to test each “unit” separately with a unit test.

Back to our original example: the bolt that connects the engine block with the engine head.

It is much simpler to test, then correct, then retest the bolt iteratively while simulating a high-heat, high-stress environment than to run the car for 3-4 hours at 5,000 RPM to generate the same test conditions.

In most cases end-to-end testers will not be able to reproduce such conditions on the final product. If unit testing is not done, somebody, sometime, somewhere will hit those conditions, and an incident will happen. With a car, it may not be such a big problem. But with an airplane, it would be: skipping that level of testing may be disastrous.

The same applies to software systems, except that in the software industry we have an advantage: creating those unit tests and simulating extreme, exceptional conditions is simple and cheap, and unit tests are quite effective for that!

The Test Automation Pyramid

At the end of each iteration, agile teams deliver a “potentially shippable product increment” which is fully tested. Fully tested means that all the new features developed in the current iteration have been tested, and that the entire regression suite for the product developed so far has been run.

This looks like a snowball that gets bigger and bigger after each roll (iteration).

Because of incremental and iterative development, and the fact that the full regression suite must be run at least once per iteration, we have no other choice than to automate!

Let me put this bluntly: In Agile, automation is not optional!

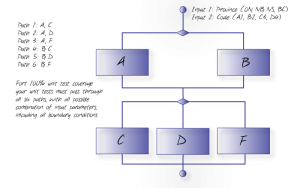

Now that we have determined that we test each user story (vertical slice of the cake) on three levels of testing, and that we have to automate, it is important to visualize this process.

The best visual explanation is Mike Cohn’s “Test Automation Pyramid”.

If there is one thing I would like you to remember from this article, it is the composition of this pyramid:

- The fact that we test and automate on three testing levels

- The position of each level, where the unit test is the foundation of the pyramid

- The relative size of each level, which depicts the relative level of the test coverage

- The major role that developers have in ensuring the exceptional quality of the product

I can’t stress enough its importance for your development process in ensuring that you get software with the quality of a fine Swiss watch!

Top Level of the Pyramid: User Experience Testing (end-to-end E2E Testing)

Ux (User Experience) testing is commonly known as end-to-end (E2E) testing. It combines functional UI testing with User Acceptance Testing (UAT). Traditionally, both functional and UAT tests are performed via the user interface (UI) of the integrated product.

That is the level of testing that most “waterfall” QA departments execute, because their only access to the system is via the UI.

It is important to note that it represents the top of the test pyramid, and its coverage is relatively small compared to the other two levels.

Yes, E2E testing may have several thousand test cases covering a wide range of user acceptance and functional tests, but the focus is still on a very high business level, as seen from the perspective of the end user.

There is no point in testing every boundary and exception condition from this layer.

As a matter of fact, it is almost impossible to simulate all exceptional conditions from this layer.

However, testing the system without exception test cases is a recipe for disaster.

Therefore, execute those tests at the service or unit test levels, where simulating those conditions and automating those test cases is simple.

No end-to-end test suite can possibly anticipate every scenario that a real user will execute on the live system, and because of that this level of testing alone is insufficient for achieving exceptional quality.

Middle Level of the Pyramid: Service Level Testing

This is the view of the system “under the hood”.

This view is much more focused on the functionality of each individual service module, therefore the test coverage must be higher.

At this level, testers have access to each individual service, or to a combination of a few services if the user story under test represents a flow.

The possibility to trigger a wide range of success and fail scenarios is much higher from this level than from the Ux level.

The service level is the base for the Ux level and, as shown in the testing pyramid, covers a bigger area, hence a much bigger test coverage.

The service level tests must be automated.

Automation at this level should provide assurance that no regression is introduced into the system after the initial implementation.

To run the regression tests only against the system under development, all dependent back-end systems must be mocked. Any test failure will then indicate that a regression has been introduced in the system under our control.
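As a rough illustration of mocking a dependent back-end system, here is a minimal Python sketch using the standard `unittest.mock` module. The `AccountService` class, the `fetch_account` call and the shape of the record it returns are all hypothetical, invented only for this example.

```python
from unittest.mock import Mock


class ServiceUnavailable(Exception):
    """Raised by the (hypothetical) service when its back-end is unreachable."""


class AccountService:
    """Hypothetical service under test; it depends on a remote back-end client."""

    def __init__(self, backend):
        self.backend = backend

    def available_balance(self, account_id):
        try:
            # Assumption: the back-end returns a dict with 'balance' and 'holds'.
            record = self.backend.fetch_account(account_id)
        except ConnectionError as error:
            raise ServiceUnavailable("back-end is down") from error
        return record["balance"] - record["holds"]


def test_balance_with_mocked_backend():
    backend = Mock()
    backend.fetch_account.return_value = {"balance": 500, "holds": 120}
    service = AccountService(backend)
    assert service.available_balance("ACC-1") == 380
    backend.fetch_account.assert_called_once_with("ACC-1")


def test_backend_outage_is_translated():
    backend = Mock()
    # Simulate an outage that would be hard to trigger on a live back-end.
    backend.fetch_account.side_effect = ConnectionError("timeout")
    service = AccountService(backend)
    try:
        service.available_balance("ACC-1")
    except ServiceUnavailable:
        return
    raise AssertionError("expected ServiceUnavailable")


if __name__ == "__main__":
    test_balance_with_mocked_backend()
    test_backend_outage_is_translated()
    print("service level regression tests passed")
```

Because the back-end is replaced by a mock, a failure in either test can only come from the code in `AccountService`, which is the system under our control.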

To run the E2E regression, the automation should run the full regression suite against the live back-end system. Depending on the complexity of the entire system, such automation may be extremely complex if the environments (data) change across releases. In this case the automation must be able to programmatically find a new set of test case data in the live back end, populate the test case requests and execute them.

Both User Experience and Service level tests ensure that the user will get “what she really wants, not necessarily what she has asked for”. Both levels therefore represent the acceptance tests, and those are usually the tests that the product owner will use to accept the user story. It is also advisable that the product owners (POs) write the acceptance tests. The acceptance level tests are usually executed by the agile team's testers.

Base of the Pyramid: Unit Testing

The unit testing represents the view of the integral pieces of the “system”.

Unit testing as depicted in the test pyramid represents:

- The base of the pyramid - unit tests ensure that the system is “unbreakable” and provide solid support for the service and Ux levels of testing

- The largest area of the pyramid - The largest test coverage

Unit testing is the developers' responsibility. Unit tests represent the view of each nut and bolt of the system. Because developers have access to each internal piece of the software, they are able to provide total test coverage.

Unit tests do not necessarily consider the business test cases, which are mostly covered in the acceptance level tests (Ux and Service tests); rather, they focus on testing the “raw functionality” of each software component.

Unit tests must be isolated from dependent objects and automated.

The major point of unit level testing is to ensure that the system is “unbreakable” and “bulletproof”!

That is why Unit Testing represents the foundation of the testing pyramid.

Therefore, contrary to the common belief that QA ensures the quality of the software, the major contribution should come from developers!

| “You cannot add the quality to the product later, rather improve the process and build the quality into the product in the first place” ~ W. Edwards Deming |

| W. Edwards Deming is best known as the major contributor to Japan’s reputation for innovative high-quality products, and is regarded as having had more impact upon Japanese manufacturing and business than any other individual not of Japanese heritage |

Unit Test Formula

The Test Automation Pyramid clearly indicates the importance of unit tests for the quality of the product. Unit tests are the foundation of quality and at the same time provide the largest test coverage, perhaps as large as the service and Ux testing combined.

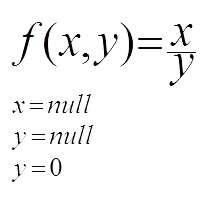

The following “unit test formula” is a guiding principle for developers:

This simple formula gives a clear answer to every developer: testing only the "happy path" (which is the division of two numbers in this sample case) is not enough. The point of unit testing is to explore all negative paths and test each abnormal and boundary condition. It is irrelevant in which business context this formula will be used (ATM, healthcare, telecom); the important thing is that the developer tries to find a way to crash "the system" and at the same time ensures that it will not happen in production under any circumstances.

Therefore, the developer must simulate the conditions when either x or y is not defined (x=null or y=null), as well as y=0 (division by 0).
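The cases above might be sketched in Python as follows. This assumes the formula is a plain division of x by y, as the text describes; the `divide` function, its error types and the `raises` helper are illustrative choices, not part of the original article.

```python
def divide(x, y):
    """Divide x by y, rejecting undefined operands and a zero divisor."""
    if x is None or y is None:
        raise ValueError("x and y must both be defined")
    if y == 0:
        raise ZeroDivisionError("y must not be zero")
    return x / y


def raises(expected, func, *args):
    """Return True if func(*args) raises the expected exception type."""
    try:
        func(*args)
    except expected:
        return True
    return False


def test_happy_path():
    assert divide(10, 4) == 2.5


def test_undefined_operands():
    # x=null and y=null from the text map to None in Python.
    assert raises(ValueError, divide, None, 4)
    assert raises(ValueError, divide, 10, None)


def test_division_by_zero():
    assert raises(ZeroDivisionError, divide, 10, 0)


if __name__ == "__main__":
    test_happy_path()
    test_undefined_operands()
    test_division_by_zero()
    print("all division cases covered")
```

Only one of the three tests exercises the happy path; the other two cover the negative paths the paragraph calls out.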

Those are the conditions that are major causes of system crashes in production, which cause incidents and financial loss and create a highly visible bad image.

There is nothing simpler than simulating those test cases in any unit test framework, but you would be surprised how many developers do not test those cases and find all kinds of excuses for not doing so. Some claim that it is ridiculous to test all parameters, that the validity of parameters must be ensured by the dependent system that provides the data, and so on.

But if you don’t test them, someone, somewhere, sometime will execute that scenario. It will cause the system to crash, create a highly visible incident and cost the company millions of dollars through disrupted service and a reputation for bad quality.

Why, when unit testing is a simple, cheap and effective way to prevent this?!

Unit testing has been considered a “best practice” in the software industry for over a decade now!

And as the old saying goes, “The chain is only as strong as its weakest link”. In software language it may be translated as “The software system is only as strong as its unit test coverage”.

Attention to small details like this is what distinguishes the quality of a genuine Swiss watch from its copycats, or a Lexus from most other car brands.

As Rolex history explains:

The relentless quest for chronometric precision rapidly led to success!

Writing the Effective Unit Test Cases

The deeper your unit test can zoom into your software system, the better quality you will achieve.

Refactoring is a way of life in agile. We constantly change the code, therefore automation of the regression tests is not optional.

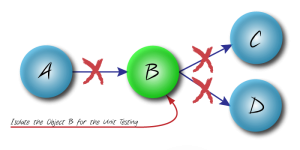

An effective unit test tests only one unit, not its surroundings. Therefore, isolate it!

Isolate the object "B" by cutting its connections with the dependent objects.

Mock the objects "C" and "D", and simulate requests from the object "A".
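A minimal Python sketch of this isolation, using the standard `unittest.mock` module, could look like the following. The class `B`, its `handle` method and the `lookup` and `save` calls on the collaborators are hypothetical names chosen for illustration; the test itself plays the role of object "A".

```python
from unittest.mock import Mock


class B:
    """Hypothetical unit under test; it delegates to collaborators C and D."""

    def __init__(self, c, d):
        self.c = c
        self.d = d

    def handle(self, request):
        # B's own logic: look up a unit price via C, then record the total via D.
        price = self.c.lookup(request["item"])
        total = price * request["quantity"]
        self.d.save(request["item"], total)
        return total


def test_b_in_isolation():
    c = Mock()  # stands in for object "C"
    d = Mock()  # stands in for object "D"
    c.lookup.return_value = 3
    b = B(c, d)

    # The test simulates a request coming from object "A".
    total = b.handle({"item": "bolt", "quantity": 4})

    assert total == 12
    c.lookup.assert_called_once_with("bolt")
    d.save.assert_called_once_with("bolt", 12)
    return total


if __name__ == "__main__":
    test_b_in_isolation()
    print("B verified in isolation")
```

Since "C" and "D" are mocks, the assertions check only B's own behavior: what it computes and how it talks to its neighbors.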

Now zoom into the object "B" and find its algorithm:

Effective unit tests should run each path with all possible combinations of input parameters:

- Enums: iterate through all enumerators

- Variables: execute with normal and boundary data
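The two bullets above can be sketched in Python by iterating over an `Enum` and over a list of boundary values. The `AccountType` enum, the `can_withdraw` rule and the 500 limit are assumptions made up for this example (the limit simply mirrors the ATM scenario used later in the article).

```python
from enum import Enum


class AccountType(Enum):
    CHEQUING = "chequing"
    SAVINGS = "savings"
    CREDIT = "credit"


DAILY_LIMIT = 500  # hypothetical limit, used only for this illustration


def can_withdraw(account_type, amount):
    """Hypothetical rule: no cash from credit accounts, 0 < amount <= limit."""
    if not isinstance(account_type, AccountType):
        raise TypeError("account_type must be an AccountType")
    if account_type is AccountType.CREDIT:
        return False
    return 0 < amount <= DAILY_LIMIT


def run_all_combinations():
    """Run every enumerator against normal and boundary amounts."""
    amounts = [-1, 0, 1, 100, DAILY_LIMIT - 1, DAILY_LIMIT, DAILY_LIMIT + 1]
    results = {}
    for account_type in AccountType:  # iterate through all enumerators
        for amount in amounts:        # normal and boundary data
            results[(account_type, amount)] = can_withdraw(account_type, amount)
    return results


if __name__ == "__main__":
    results = run_all_combinations()
    assert results[(AccountType.CHEQUING, DAILY_LIMIT)] is True
    assert results[(AccountType.CHEQUING, DAILY_LIMIT + 1)] is False
    assert results[(AccountType.SAVINGS, 0)] is False
    assert not any(ok for (kind, _), ok in results.items()
                   if kind is AccountType.CREDIT)
    print(len(results), "combinations checked")
```

Iterating over the enum itself means that a newly added enumerator is picked up by the test automatically instead of being silently skipped.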

Writing the Effective Acceptance Test Cases

In order to ensure the top quality of each delivered user story, the team must understand what the product owner really wants, not only what she has asked for!

That means that each developer, tester, analyst or UI designer on the team who will be working on that story must communicate with the product owner, not just during the planning session but during the entire iteration.

After a live conversation and with an understanding of what the product owner really wants, developers will design and implement the functionality that delivers this story.

At the same time, agile QA will define the test cases. Defining the test cases is an art!

The better the artist, the better the quality of your software!

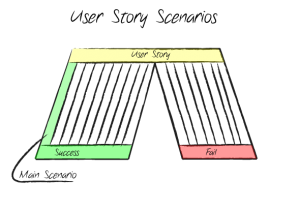

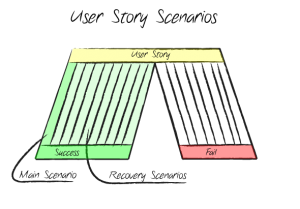

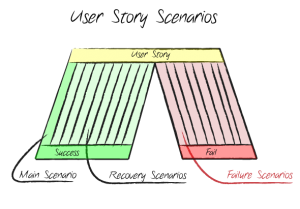

A user story is not a use case; however, their similarity is striking. One of the best ways to analyze and understand a user story is to use Alistair Cockburn’s “striped trousers” technique.

A user story, like a use case, is a collection of scenarios. The belt of the trousers is the user story goal that holds all the scenarios together.

Each business scenario represents one test case.

A distinct collection of test cases is a test suite.

There are two types of scenarios: success and fail.

We can group the success scenarios in one (left) sleeve of the trousers, and the fail scenarios in the other (right) one.

The Main Success Scenario - Happy Path

The scenario where everything goes right from start to end and the user’s goal is satisfied is called the main scenario, commonly known as the happy path.

The main scenario is the leftmost scenario.

Main scenario example (ATM Banking Machine)

The user's goal is to withdraw $100 in cash from the ATM. The user puts the banking card into the ATM, punches in her PIN, selects the Chequing account and requests a withdrawal of $100.

The ATM dispenses $100, prints the receipt and returns the banking card to the user.

The user walks away satisfied with $100 in her wallet.

Recovery Scenarios

There may be bumps on the road to success, but the system will still satisfy the user's goal. The other paths that lead to success are the “recovery scenarios”.

All recovery scenarios are part of the Success Sleeve of the striped trousers.

Recovery scenario example #1 (ATM Banking Machine)

The user's goal is to withdraw $100 in cash from the ATM. The user puts the banking card into the ATM and punches in an incorrect PIN. The ATM responds that the PIN is wrong. The user punches in the correct PIN, selects the Chequing account and requests a withdrawal of $100.

The ATM dispenses $100, prints the receipt and returns the banking card to the user.

The user walks away satisfied with $100 in her wallet.

Recovery scenario example #2 (ATM Banking Machine)

The user's goal is to withdraw cash from the ATM. The user puts the banking card into the ATM, punches in her PIN, selects the Chequing account and requests a withdrawal of $1,000.

The ATM responds that her daily withdrawal limit is $500. The user requests a withdrawal of $500. The ATM dispenses $500 (25x$20 bills), prints the receipt and returns the banking card to the user.

The user walks away with $500 in her wallet.

Fail Scenarios

All paths that lead to goal abandonment are the “fail scenarios”.

All fail scenarios are part of the Fail Sleeve of the striped trousers.

Fail scenario example #1 (ATM Banking Machine)

The user's goal is to withdraw $100 in cash from the ATM. The user puts the banking card into the ATM and punches in an incorrect PIN. The ATM responds that the PIN is wrong. The user punches in an incorrect PIN three more times. The ATM responds that the maximum number of PIN attempts has been reached and that the user should call customer care to obtain a new PIN. The ATM returns the banking card to the user and blocks withdrawals from that banking card.

The user walks away without $100 in her wallet.
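To show how each scenario can become one automated test case, here is a small Python sketch of the four ATM scenarios above. The `Atm` class is a toy model written only for this illustration; its method names, its response strings and the PIN "1234" are assumptions, while the $500 limit and the four wrong PIN attempts come from the scenarios themselves.

```python
class Atm:
    """Toy ATM model (hypothetical) used to express scenarios as test cases."""

    def __init__(self, correct_pin, daily_limit=500, max_pin_attempts=4):
        self.correct_pin = correct_pin
        self.daily_limit = daily_limit
        self.max_pin_attempts = max_pin_attempts
        self.failed_attempts = 0
        self.authenticated = False
        self.blocked = False

    def enter_pin(self, pin):
        if self.blocked:
            return "blocked"
        if pin == self.correct_pin:
            self.authenticated = True
            return "ok"
        self.failed_attempts += 1
        if self.failed_attempts >= self.max_pin_attempts:
            self.blocked = True
            return "blocked"
        return "wrong pin"

    def withdraw(self, amount):
        """Return the cash dispensed; 0 means the request was refused."""
        if self.blocked or not self.authenticated:
            return 0
        if amount > self.daily_limit:
            return 0
        return amount


def main_success_scenario():
    atm = Atm("1234")
    assert atm.enter_pin("1234") == "ok"
    return atm.withdraw(100)


def recovery_wrong_pin_once():
    atm = Atm("1234")
    assert atm.enter_pin("0000") == "wrong pin"
    assert atm.enter_pin("1234") == "ok"
    return atm.withdraw(100)


def recovery_over_daily_limit():
    atm = Atm("1234")
    assert atm.enter_pin("1234") == "ok"
    assert atm.withdraw(1000) == 0  # refused: above the daily limit
    return atm.withdraw(500)


def fail_card_blocked():
    atm = Atm("1234")
    responses = [atm.enter_pin("0000") for _ in range(4)]
    assert responses == ["wrong pin", "wrong pin", "wrong pin", "blocked"]
    assert atm.enter_pin("1234") == "blocked"  # even the right PIN is refused
    return atm.withdraw(100)


if __name__ == "__main__":
    assert main_success_scenario() == 100
    assert recovery_wrong_pin_once() == 100
    assert recovery_over_daily_limit() == 500
    assert fail_card_blocked() == 0
    print("test suite passed")
```

Together the four functions form a small test suite for the "withdraw cash" story: three belong to the success sleeve and one to the fail sleeve.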

The goal of agile QA is to define all scenarios (test cases) for the given user story.

If identifying or executing all scenarios is not possible or not feasible, the user story is not good!

It is either not properly defined with clear acceptance criteria, or it is too big, and the number of test cases is too large to be defined and executed in one iteration (2-4 weeks).

The best way to define a user story for which all test cases can be defined and executed is to follow the INVEST criteria for writing user stories:

I – Independent

N – Negotiable

V – Valuable

E – Estimable

S – Small

T – Testable

If, for any reason, your story is declared “done” and “accepted” without satisfying all defined test cases, you are opening a dangerous quality hole in your system. Eventually somebody, somewhere will execute that particular scenario, and the system may crash, causing potentially big losses for the company.

Why? Why not simply INVEST in a good user story?!

If you promote this quality culture in your agile team and organization, where each member is responsible for and owns the quality of the product, and where skipping or missing test cases is not acceptable, you are on the right track to create software that matches the quality of a “fine and genuine Swiss watch”.

Compromise on quality in any way, and your software will be the equivalent of a “fake aftermarket $10 watch”.

You choose what you want, and what reputation you want for your product, your team or your company!

And if compromising quality seems acceptable due to time-to-market pressure, think twice.

Steve Jobs brought Apple back from the brink of collapse and turned it into a company with a bigger market capitalization than Microsoft, Oracle or IBM. Steve never compromised on the quality vision for Apple products. Compare the smooth and slick touch-screen scrolling experience introduced in the first versions of the iPhone with the experience offered by the copycats that came to market after the iPhone, including the newest generation of touch-screen GPS devices!